A tensor in PyTorch can be normalized using the `normalize()` function provided in `torch.nn.functional`.

Here is the original neural net: `self.conv1 = nn.Conv2d(in_channels=in_dim, out_channels=1, kernel_size=(1, 1), stride=1)`, followed later by `self.fc1 = nn.Linear(flattened_dim + obs_dim, 20)`. I'd like to convert it to something built with `nn.Sequential(...)`.

A related transfer-learning pattern replaces the `fc` layer of a ResNet50-based model (`CatfishModel.fc` in the source) with a small head: `nn.Sequential(nn.Linear(transfer_model.fc.in_features, 500), nn.ReLU(), nn.Dropout(), nn.Linear(500, 2))`.

For graph neural networks there is an extension of the `torch.nn.Sequential` container for defining a sequential GNN model. It is needed because GNN operators take in multiple inputs, while a plain `nn.Sequential` passes a single value from one module to the next.
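To make the multiple-input point concrete, here is a minimal sketch in plain PyTorch. `MultiInputSequential` and `AddAndScale` are hypothetical names invented for this illustration; this is not how DGL or any other GNN library implements its container, only one way the idea could look.

```python
import torch
import torch.nn as nn


class MultiInputSequential(nn.Sequential):
    """Hypothetical helper: like nn.Sequential, but forwards several tensors."""

    def forward(self, *inputs):
        for module in self:
            # Each module receives everything the previous module returned.
            outputs = module(*inputs)
            # Wrap a single return value in a tuple so the next call can unpack it.
            inputs = outputs if isinstance(outputs, tuple) else (outputs,)
        return inputs[0] if len(inputs) == 1 else inputs


class AddAndScale(nn.Module):
    """Toy two-input layer standing in for a GNN operator (illustration only)."""

    def forward(self, x, y):
        return x + y, 2 * y


net = MultiInputSequential(AddAndScale(), AddAndScale())
x, y = net(torch.ones(3), torch.ones(3))
print(x, y)
```

The only requirement is that each module returns as many values as the next one accepts.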

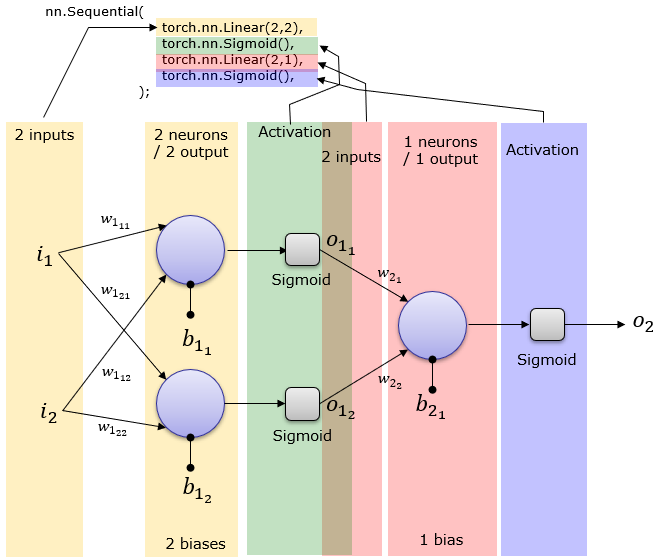

PyTorch nn.Sequential how-to

The DGL container exposes `forward(graph, *feats)`. The `graph` argument (DGLGraph or list of DGLGraphs) is the graph or graphs to apply modules on. The output of the \(i\)-th module should match the input of the \((i+1)\)-th module in the sequential. In the documentation example, random graphs such as `erdos_renyi_graph(8, 0.8)` are created, then `net = Sequential(ExampleLayer(), ExampleLayer(), ExampleLayer())` and `n_feat = torch.rand(32, 4)`, and calling `net` with the graphs and `n_feat` returns a tensor.

Hi, how's it going? I have a simple neural net that takes in a convolutional layer, flattens the output, concats another observation to the output, and then passes it through two linear layers. I know how to do everything in `nn.Sequential` except for the concatenation.
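One possible way to handle the concatenation is to keep `nn.Sequential` for the parts before and after it and do the `torch.cat` in a small `forward` method. The sketch below is an assumption-laden illustration, not the original poster's code: the class name `ConvObsNet`, the `ReLU` between the linear layers, the output size, and the input sizes are all made up for the example.

```python
import torch
import torch.nn as nn


class ConvObsNet(nn.Module):
    """Hypothetical name; all sizes used below are assumptions."""

    def __init__(self, in_dim, flattened_dim, obs_dim, out_dim=1):
        super().__init__()
        # Convolution and flattening fit in a plain nn.Sequential.
        self.features = nn.Sequential(
            nn.Conv2d(in_channels=in_dim, out_channels=1, kernel_size=(1, 1), stride=1),
            nn.Flatten(),
        )
        # The two linear layers also fit in a plain nn.Sequential.
        self.head = nn.Sequential(
            nn.Linear(flattened_dim + obs_dim, 20),
            nn.ReLU(),  # assumed activation, not stated in the question
            nn.Linear(20, out_dim),
        )

    def forward(self, image, obs):
        flat = self.features(image)
        # The concatenation needs a second input, so it lives outside the Sequential blocks.
        combined = torch.cat([flat, obs], dim=1)
        return self.head(combined)


net = ConvObsNet(in_dim=3, flattened_dim=8 * 8, obs_dim=5)
out = net(torch.rand(4, 3, 8, 8), torch.rand(4, 5))
print(out.shape)
```

Because the 1x1 convolution outputs one channel, the flattened size here is simply height times width of the input image.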

I want to use the pretrained ResNet50 for transfer learning and fine-tuning in C++. In Python it looks like this: `resnet50 = models.resnet50(pretrained=True)` and `fc_inputs = resnet50.fc.in_features`, which gives `fc_inputs: 2048`; `resnet50.fc` is then replaced with a new `nn.` module (the rest is truncated in the source). My dataset is 224×224 and I have trained a model for gender classification. The results: `Epoch 0/1 train Loss: 0.0004 Acc: 0.9999 val Loss: 0.0000 Acc: 1.0000`. The model is created as a class, `class CNN(nn.Module)`.

I can't go inside the `nn.Sequential` object and initialise the weights for its members. In this case, we would prefer to write the module with a class, and leave `nn.Sequential` only for very simple functions. In PyTorch, layers are often implemented either as `torch.nn.Module` objects or as `torch.nn.functional` functions.

The DGL examples use these imports: `import torch`, `import dgl`, `import torch.nn as nn`, `import dgl.function as fn`, `import networkx as nx`, and `from dgl.nn.pytorch import Sequential`. In the first example, `ExampleLayer(nn.Module)` calls `super().__init__()` in `__init__` and defines `forward(self, graph, n_feat, e_feat)`, which works inside a `with graph. ...` block and ends with `return n_feat, e_feat`. A graph `g` is built with `add_edges`, and the model is `net = Sequential(ExampleLayer(), ExampleLayer(), ExampleLayer())`.

Mode 2: sequentially apply GNN modules on different graphs. Here `forward(self, graph, n_feat)` takes only node features, and the graphs are random ones such as `erdos_renyi_graph(16, 0.2)`.
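On the weight-initialisation point: the members of an `nn.Sequential` can be reached by index or visited with `Module.apply`. The sketch below builds a stand-alone classification head with the 2048 input features reported above, so it does not load a ResNet50 at all. The layer sizes 500 and 2, the helper name `init_linear`, and the choice of Xavier initialisation are assumptions for illustration.

```python
import torch
import torch.nn as nn

in_features = 2048  # value reported in the text for resnet50.fc.in_features

# Assumed head layout: hidden size 500 and 2 output classes.
head = nn.Sequential(
    nn.Linear(in_features, 500),
    nn.ReLU(),
    nn.Dropout(),
    nn.Linear(500, 2),
)


def init_linear(module):
    """Hypothetical helper: re-initialise only the Linear members."""
    if isinstance(module, nn.Linear):
        nn.init.xavier_uniform_(module.weight)
        nn.init.zeros_(module.bias)


# apply() visits every submodule, including those inside the Sequential.
head.apply(init_linear)

# Members can also be reached directly by index.
first_linear = head[0]

logits = head(torch.rand(4, in_features))
print(first_linear, logits.shape)
```

With a real pretrained model, such a head would be assigned to the model's `fc` attribute in place of the original layer.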

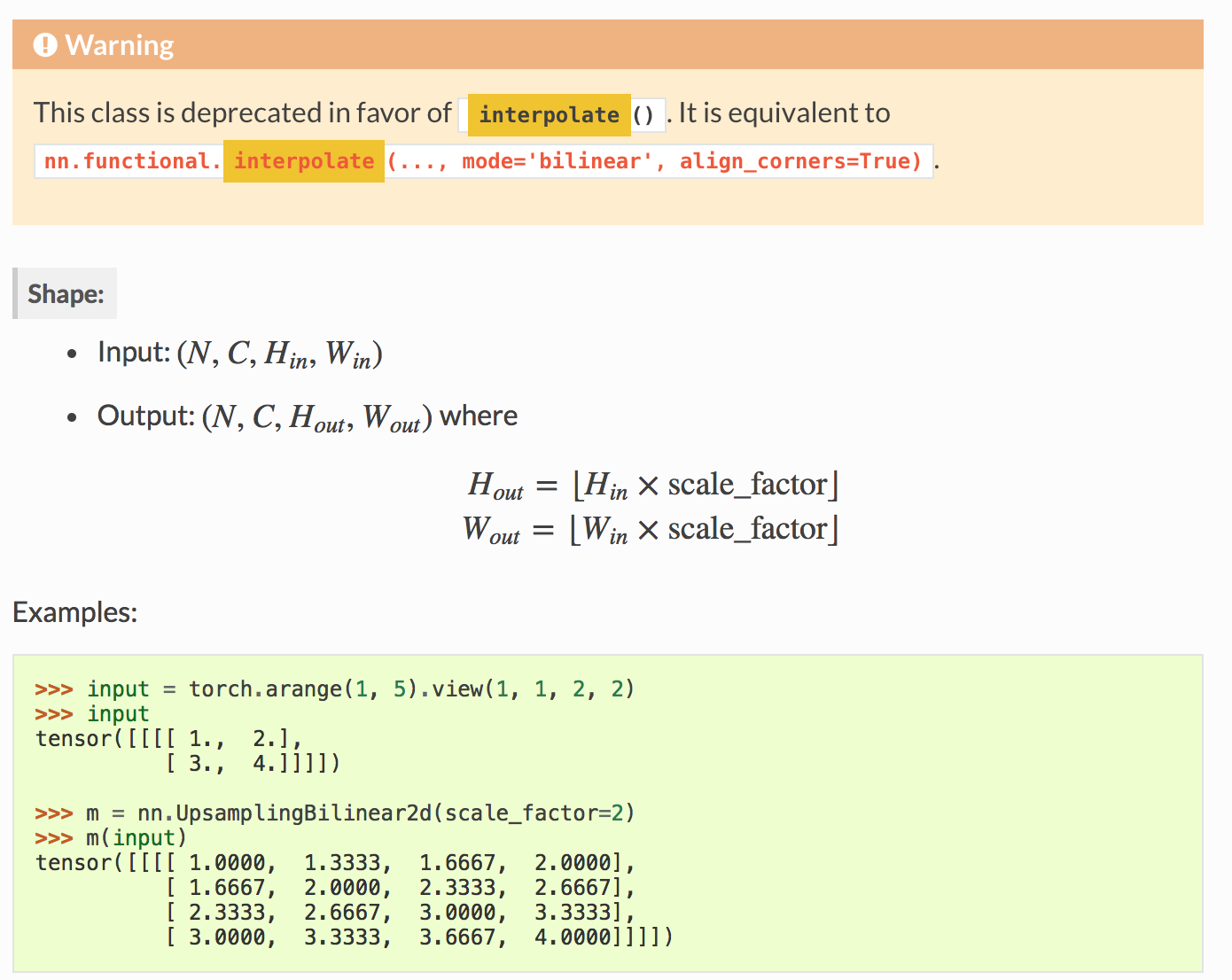

In PyTorch, the `torch.nn.Dropout()` method randomly replaces some of the elements of an input tensor with 0, with a given probability. This method only supports non-complex-valued inputs. Before moving further, let's see the syntax of the given method.

Syntax: `torch.nn.Dropout(p=0.5)`
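A short sketch of this behaviour, assuming `p=0.5` and an all-ones input chosen for illustration. In training mode the surviving elements are scaled by `1 / (1 - p)`; in evaluation mode the layer passes its input through unchanged.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # fixed seed so repeated runs drop the same elements

drop = nn.Dropout(p=0.5)
x = torch.ones(1000)

# Training mode: each element is zeroed with probability p,
# and the remaining ones are scaled by 1 / (1 - p) = 2.
drop.train()
y_train = drop(x)

# Evaluation mode: dropout is disabled and the input is returned as-is.
drop.eval()
y_eval = drop(x)

print(y_train[:10])
print(y_eval[:10])
```

Calling `model.eval()` on a network that contains `nn.Dropout` switches every dropout layer to this pass-through behaviour.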
